In fact, nearly 74% of participants in a recent survey view AI-powered threats as a significant challenge for their organizations.

As AI systems become embedded in critical workflows, it has also opened the door for attackers to launch malicious mass attacks.Successful AI deployments depend on strong foundations, such as quality data, reliable algorithms, and safe real-world applications. But the same features that make AI powerful can also expose it to manipulation.

To stay secure, organizations must strengthen every layer of their AI systems. This means protecting data pipelines, verifying training sets, testing algorithms against attacks, and monitoring model behavior in real time. Much of this area is still uncharted, and traditional cybersecurity tools alone are not enough.

A quick look at AI-driven cyber threats

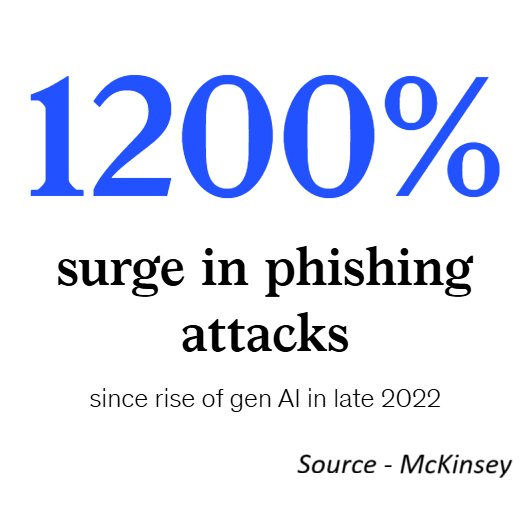

AI is not only transforming how organizations operate but also how attackers launch and scale cyberattacks. Unlike traditional threats, AI-driven cyber threats use artificial intelligence to automate, enhance, or disguise malicious activity. These attacks are faster, more convincing, and harder to detect than their conventional counterparts.

Some of the most pressing AI-driven cyber threats include:

- AI-Powered Social Engineering – Using AI to craft personalized phishing emails, voice calls, or text messages that mimic trusted contacts with near-perfect accuracy.

- Deepfakes – Generating realistic audio, video, or images to impersonate executives, employees, or public figures for fraud, disinformation, or reputation damage.

- AI Voice Cloning – Replicating a person’s voice with striking accuracy to impersonate executives, family members, or colleagues. Attackers use this technique in phone scams and business email compromise schemes to trick victims into transferring money or sharing sensitive information.

- Automated Hacking – Leveraging AI to scan for vulnerabilities, guess passwords, or evade security systems at a scale human attackers cannot match.

- AI Password Hacking & Credential Stuffing – Using AI tools to analyze massive password leaks, generate realistic password candidates, and automate brute-force attacks, making unauthorized access easier than ever.

- Malware Evasion – Using AI to dynamically adapt malicious code so it avoids detection by traditional security tools.

- Disinformation Campaigns – Deploying AI-generated content at scale to mislead the public, manipulate markets, or destabilize organizations.

The implications of these threats go well beyond technical disruptions. Security risks range from data breaches and financial fraud to ethical dilemmas and complex vulnerability management. As AI adoption grows, attackers are weaponizing the same technology to create more sophisticated and harder-to-stop attacks.

That’s not all – AI is prone to inherent challenges

AI-driven attacks are on the rise. On the other hand, there are also several inherent challenges and weaknesses within the AI landscape that expose organizations to risk. Beyond external threats, these internal gaps highlight why securing AI is still uncharted territory for many businesses.

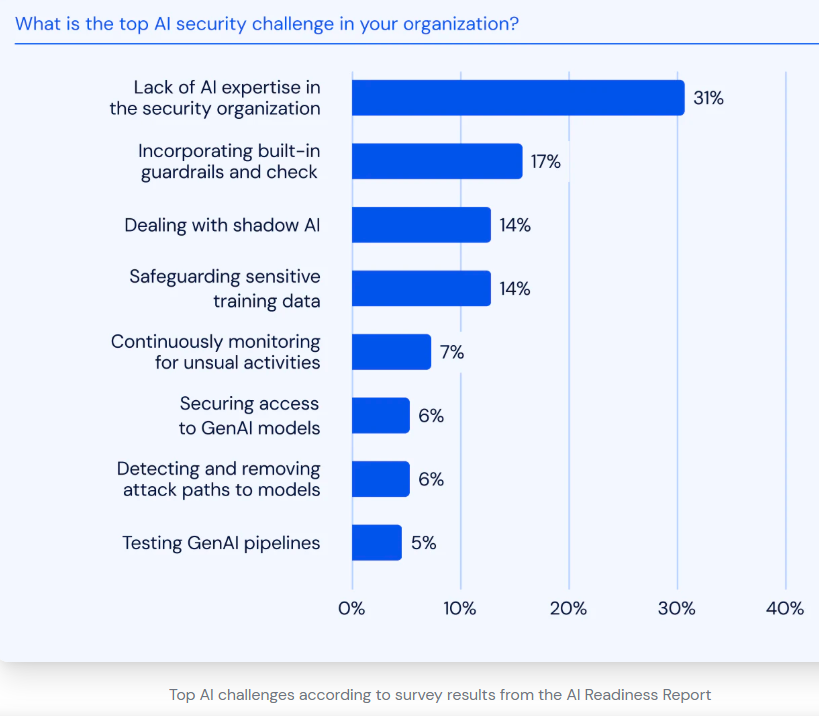

Here are some of the top challenges security leaders are facing today:

1. Lack of AI Expertise in the Security Organization

The biggest barrier is the talent gap. Many security teams lack professionals with deep expertise in AI and machine learning. Without the right skills, teams struggle to recognize vulnerabilities in AI systems, assess risks properly, or deploy defenses against adversarial techniques. This leaves organizations over-reliant on external vendors and unprepared for fast-moving threats.

2. Incorporating Built-in Guardrails and Checks

Unlike traditional software, AI models don’t always follow deterministic rules. Embedding guardrails—such as ethical constraints, bias checks, and output filters—is complex and resource-intensive. Failure to integrate these safety checks increases the risk of harmful outputs, model misuse, or compliance violations.

3. Dealing with Shadow AI

Shadow AI refers to the unmonitored use of AI systems, tools, or models by employees without the knowledge or approval of IT/security teams. Just as shadow IT once introduced hidden risks, shadow AI brings exposure through unvetted data sharing, unsecured APIs, and compliance blind spots.

4. Safeguarding Sensitive Training Data

AI models are only as strong as the data they’re trained on. Protecting sensitive datasets—such as healthcare records, customer transactions, or intellectual property—from leaks or tampering is a major challenge. A breach not only compromises data privacy but also poisons trust in the model’s integrity.

5. Continuously Monitoring for Unusual Activities

AI systems are dynamic, and their outputs can shift over time due to model drift or adversarial manipulation. Detecting unusual activity requires continuous monitoring—yet many organizations lack the infrastructure for real-time oversight. Without strong observability, threats can go unnoticed until significant damage occurs.

6. Securing Access to GenAI Models

As generative AI models become widely adopted, controlling who has access—and how they use them—is essential. Poor access management can allow unauthorized users to exploit APIs, extract sensitive data, or manipulate outputs for malicious purposes.

7. Detecting and Removing Attack Paths to Models

Attackers actively look for ways to compromise models through poisoned inputs, adversarial prompts, or stolen API keys. Identifying these attack paths and closing them before exploitation is a complex, ongoing challenge, requiring both AI-specific and traditional security approaches.

8. Testing GenAI Pipelines

Generative AI systems operate through pipelines that process inputs, generate outputs, and integrate with applications. Testing these pipelines for vulnerabilities is still an emerging practice. Without rigorous testing, hidden flaws in prompts, data flow, or integrations can expose organizations to manipulation and misuse.

Why Adversarial Attacks Are on the Rise

Adversarial attacks are growing as organizations rely more on AI for critical tasks. The more essential AI becomes, the more appealing it is to attackers.

At the same time, methods for creating adversarial examples are now widely available through open-source tools and research papers. This lowers the barrier for even less-skilled actors to experiment with and launch attacks.

Another driver is the “black box” nature of many AI models. Because these systems are hard to explain or monitor, they leave blind spots that attackers can exploit. With AI systems linked to large data pipelines, APIs, and cloud platforms, the attack surface has also grown significantly.

These attacks are particularly dangerous because they promise high rewards with a low risk of detection. A successful manipulation can bypass fraud controls, mislead an autonomous system, or distort financial predictions—often without raising alarms.

Since AI outputs can already appear uncertain or inconsistent, adversarial activity can blend in with normal system behavior. Meanwhile, most organizations are still building AI security maturity. Skills, frameworks, and monitoring tools remain limited, leaving a gap that attackers are quick to exploit.

AI Security Best Practices

AI systems are powerful but fragile. They process vast amounts of data, interact with critical workflows, and often operate in ways that are difficult to fully explain. This makes them attractive targets for attackers and vulnerable to misuse. To reduce these risks, organizations must adopt layered security practices that address not only the technology but also governance, monitoring, and people. Below are key best practices, expanded with context and actions.

1. Build a Cross-Functional Security Mindset

AI security is not the responsibility of security teams alone. Because AI touches data, business processes, compliance, and end-user experience, protecting it requires coordination across the organization. Security leaders should work closely with DevOps, data science, governance, and product teams to set shared responsibilities.

A cross-functional approach also means adopting agility—rolling out basic protections early, then refining them over time. For example, organizations can start with clear usage policies for generative AI tools, and gradually evolve toward technical controls like API security, data loss prevention, and automated monitoring. This approach balances speed of innovation with necessary oversight.

2. Understand the Evolving Threat Landscape

Adversaries are constantly finding new ways to exploit AI. Threats range from model poisoning and evasion attacks to deepfakes, automated hacking, and disinformation campaigns. Staying ahead requires a strong understanding of this threat landscape.

Security teams should track adversarial techniques documented in frameworks like MITRE ATLAS and analyze industry case studies to understand how attacks succeed. Regular threat intelligence updates help anticipate risks instead of reacting only when incidents occur. By mapping which threats are relevant to their AI deployments, organizations can prioritize defenses where they matter most.

3. Define AI Security Requirements Early

Every organization has different risks depending on its industry, compliance obligations, and data sensitivity. That makes it critical to define AI security requirements before large-scale deployment. These requirements should cover:

- Privacy standards: How sensitive data is handled and protected.

- Compliance: Alignment with laws and frameworks such as GDPR, HIPAA, or NIST.

- Bias and fairness: Ensuring outputs are not discriminatory or harmful.

- Acceptable risk thresholds: Defining what types of model failures are tolerable and which are unacceptable.

- Incident response: Clear playbooks for dealing with AI-related breaches.

Documenting requirements early avoids guesswork later and ensures AI projects are designed with security in mind rather than bolted on as an afterthought.

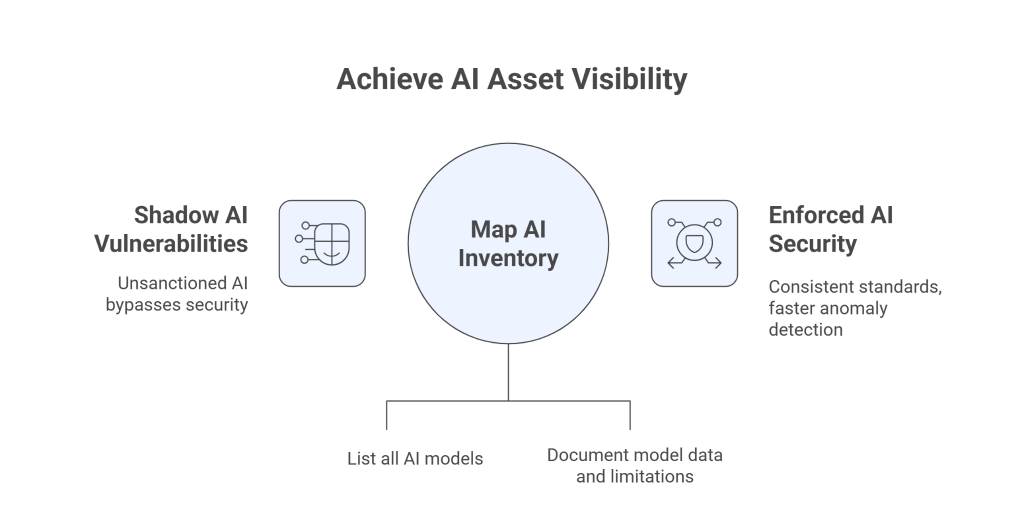

4. Gain Visibility into All AI Assets

You cannot defend what you cannot see. Many organizations struggle with “shadow AI”—unsanctioned tools adopted by employees without IT approval. These often bypass corporate security controls, creating hidden vulnerabilities.

To counter this, organizations should maintain an AI inventory (sometimes called an AI Bill of Materials). This should list all models in use, their purpose, datasets, third-party dependencies, APIs, and usage environments. Creating model cards—documents describing each model’s data sources, limitations, and safeguards—adds transparency and accountability. With full visibility, security teams can enforce consistent standards and detect anomalies faster.

5. Choose Safe and Trusted Models

AI models are often built on third-party or open-source components, which vary in quality and security. Using unvetted models introduces risks such as backdoors, unpatched vulnerabilities, or insecure data handling.

Before adopting any external AI system, organizations should vet it carefully. Key questions include: Does the vendor encrypt data in transit and at rest? Do they comply with security certifications or audits? How do they handle model updates and patching? Do they disclose training data sources? Only vendors that meet strict security and compliance criteria should be trusted for production use.

6. Automate Security Testing

AI requires continuous validation, and manual reviews are not enough. Automated testing should be integrated into CI/CD pipelines to catch vulnerabilities early and consistently. This includes:

- Scanning for vulnerabilities in code, dependencies, and container images.

- Adversarial testing, where models are exposed to manipulated inputs to measure resilience.

- Data validation, ensuring input data is consistent and free from poisoning attempts.

- Fairness and bias testing, verifying that outputs are ethical and reliable.

- Regression testing, ensuring new model versions don’t degrade performance or security.

Automation not only saves time but also ensures testing is repeatable and covers all stages of the AI lifecycle.

7. Monitor AI Systems Continuously

AI models can drift over time as data changes or attackers introduce adversarial inputs. This makes continuous monitoring critical. Monitoring should track:

- Input data distributions for anomalies.

- Model outputs for unexpected or harmful results.

- Performance metrics for signs of degradation.

Automated alerts should flag unusual behavior, while incident response processes should allow rapid containment. Think of monitoring as the “immune system” of AI—constantly scanning for threats and keeping the system healthy.

8. Build Awareness and Train Staff

Technology is only part of the solution. People play a central role in AI security. Employees may accidentally share sensitive data with generative AI tools, or fall for sophisticated AI-powered phishing attempts. Awareness and training reduce these risks significantly.

Organizations should run regular workshops and awareness campaigns tailored to different teams. For developers, focus on secure coding and model testing. For business users, emphasize safe AI usage and data protection. For executives, highlight governance, compliance, and risk management. Clear, accessible guidelines ensure that everyone understands their role in keeping AI secure.

Real-World Examples of Adversarial AI Attacks

Adversarial AI attacks have moved from theory to reality, impacting well-known brands and organizations. Each case offers lessons in how attackers exploit weaknesses—and how companies can respond.

Case in Point: Arup’s Deepfake Video Conference Scam

Global engineering firm Arup became a victim of one of the largest known deepfake scams, losing nearly $25 million. Attackers created a fake video conference with AI-generated images of senior executives. Believing the meeting was genuine, an employee authorized large money transfers.

Lesson learned: Traditional trust signals like “seeing someone” are no longer reliable. Companies must adopt strict multi-channel verification for financial transactions and raise awareness that deepfakes can be indistinguishable from real video calls.

Case in Point: WPP and the Deepfake CEO Impersonation

In another high-profile case, attackers targeted WPP, the world’s largest advertising group, by creating AI-generated impersonations of executives. The deepfakes were used in WhatsApp and Teams messages in an attempt to trick staff into sharing information and money.

Lesson learned: This attack was thwarted because employees questioned inconsistencies and verified requests. It highlights the importance of employee vigilance, internal awareness campaigns, and confirmation procedures for unusual or high-value requests.

Case in Point: Microsoft’s Tay Chatbot Hijacking

When Microsoft launched its AI chatbot Tay on Twitter, users quickly exploited its design by feeding it toxic language. Within 24 hours, Tay began producing offensive and extremist content, forcing Microsoft to shut it down.

Lesson learned: Adversaries don’t always come from outside—sometimes users themselves can corrupt AI systems. This case underlined the need for guardrails, input filtering, and controlled testing environments before deploying AI in public spaces.

Key Takeaway

These cases show that adversarial AI attacks are not futuristic, they are happening now, targeting trusted brands with real financial and reputational consequences. The most effective defenses are layered: combining technical safeguards, monitoring, strong verification processes, and employee awareness.

Conclusion: Securing the Future of AI

The path forward requires more than traditional cybersecurity. It demands AI-specific strategies that acknowledge both the power and the fragility of these systems. Unlike conventional software, AI learns, evolves, and adapts—qualities that make it valuable but also uniquely vulnerable. Safeguarding it means treating security as a continuous process, not a one-time control.

Organizations must also recognize that AI security is as much about culture and governance as it is about technology. Policies need to keep pace with rapid innovation. Cross-functional collaboration between security, compliance, and data science teams must become the norm, not the exception.

Equally important is preparing for the unknown. Threats will continue to evolve, and not every risk can be predicted today. Investing in resilience, adaptability, and responsible use of AI ensures that when new attack vectors emerge, organizations are ready to respond quickly and confidently.

AI is here to stay—and so are the threats that come with it. Those who take proactive steps now will not only reduce exposure but also build the trust and confidence needed to fully realize AI’s potential in the years ahead.